Project

Personal Website

The website this project is hosted on. A project created as a home for all my development work and tinkering...

When I first set out to host my website, I started by exploring the world of cloud providers. Naturally, I checked out the big three first to see what they offered.

My initial specification was a Django site with PostgreSQL for the database, so I was looking at a single small virtual machine to host it all, as the expected traffic would be rather low. Another requirement was to balance spending against vendor lock-in. Offerings that are cheaper or even free often turn out to be features that can only be configured for that platform, such as a specific flavour of serverless functions.

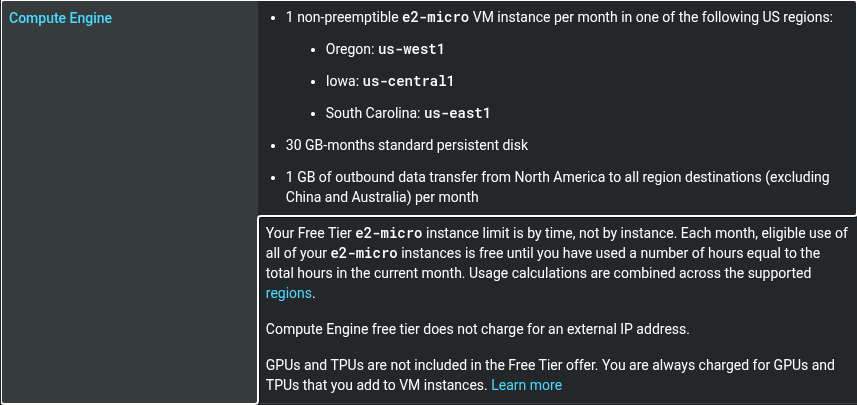

My first look was at Google Cloud Platform (GCP), which had a decent offering and had been recommended to me. The problem was that the only free VM offerings were based in the States, and I wanted something that was at least in Europe.

This led me to check out Akamai, formerly known as Linode, as an alternative. Discovering a solid £200 credit valid for two months made it my first platform for hosting the website. Compared to the big three, this platform is much simpler, offering straightforward but solid options. The VMs are fairly priced and easy to set up, while offering very good CPU burst capabilities. This allowed me to host my entire site on one Docker stack and keep the infrastructure requirements simple.

    resource "linode_instance" "web" {
      label           = "django_instance"
      image           = "linode/fedora39"
      region          = var.region
      type            = "g6-standard-1"
      authorized_keys = [data.linode_sshkey.key.ssh_key]
      swap_size       = 512
    }
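
The resource above refers to `var.region` and `data.linode_sshkey.key`, which are declared elsewhere in the configuration. A minimal sketch of what those supporting declarations could look like, assuming a European region and an SSH key already uploaded to the Linode account. The default region and the key label `personal-key` are placeholders, not the values actually used:

```hcl
# Hypothetical default: "eu-central" is Linode's Frankfurt region ID.
variable "region" {
  description = "Linode region to deploy the instance in"
  type        = string
  default     = "eu-central"
}

# Looks up an SSH key that already exists on the account by its label.
# The label here is an assumed placeholder.
data "linode_sshkey" "key" {
  label = "personal-key"
}
```

Keeping the region in a variable means the same configuration can be pointed at another data centre without touching the instance resource.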

Those first two months on this platform were great, but I was seeking to eliminate costs completely, and those credits could only get me so far. I would have preferred to stay on the same platform for eternity, but requirements have to be adhered to.

My next foray was with AWS, which I had considered earlier before finding the options overwhelming and getting a sort of selection paralysis. I needed to find simpler solutions first before I could take on this behemoth. Luckily, Akamai scratched that itch and gave me the initial experience I needed.

One of my biggest gripes with this platform was its CLI: an absolute mess of a tool where finding the right functionality took way too long. You do get better with command line tools over time, and it helps to have used the web interface first so you can mentally connect things to the CLI, but this tool was cluttered, and other tools I have used simply had better external and built-in documentation. Luckily, I was using Terraform to deploy my infrastructure, so the command line was mostly used to handle some S3 tasks.

Coming over from the previous provider, I started experiencing performance issues, as the free tier VMs were definitely a few steps below what I had been using before. Overhauling my approach was necessary, as otherwise I would have struggled to serve the website to even a small number of users. The low amount of RAM caused my Docker build to grind to a halt, so I moved the build to my local machine and decided to use the RDS service for my database.

    resource "aws_db_instance" "postgres" {
      identifier                 = "${var.project}-postgres"
      instance_class             = "db.t3.micro"
      allocated_storage          = 20
      max_allocated_storage      = 20
      engine                     = "postgres"
      engine_version             = "15"
      auto_minor_version_upgrade = true
      db_name                    = var.db_name
      username                   = var.db_user
      publicly_accessible        = false
      db_subnet_group_name       = aws_db_subnet_group.postgres.name
      vpc_security_group_ids     = [aws_security_group.database.id]

      tags = {
        Name        = "${var.project}-postgres"
        Environment = "Production"
        Project     = var.project
      }
    }
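
The database resource depends on a subnet group and a security group that are not shown here. As a rough sketch, they might be defined along these lines. `var.vpc_id`, `var.private_subnet_ids` and `var.web_security_group_id` are hypothetical inputs introduced for illustration, and the single rule is an assumption that only the web server needs to reach PostgreSQL on port 5432:

```hcl
# Hypothetical inputs; the real configuration may source these differently.
variable "vpc_id" {
  type = string
}

variable "private_subnet_ids" {
  type = list(string)
}

variable "web_security_group_id" {
  type = string
}

# Subnets the RDS instance is allowed to be placed in.
resource "aws_db_subnet_group" "postgres" {
  name       = "${var.project}-postgres"
  subnet_ids = var.private_subnet_ids
}

# Only accepts PostgreSQL connections coming from the web server's security group.
resource "aws_security_group" "database" {
  name   = "${var.project}-database"
  vpc_id = var.vpc_id

  ingress {
    from_port       = 5432
    to_port         = 5432
    protocol        = "tcp"
    security_groups = [var.web_security_group_id]
  }
}
```

Combined with `publicly_accessible = false`, this keeps the database reachable from the VM but not from the internet.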

After some more tweaking and setting up proper caching on the server, the whole system ran with minimal costs until the first 12 months were over...

Time moved on, and I needed another provider to keep hosting costs as low as possible. This time my eye was on Microsoft's platform, which I had heard both good and bad things about before. My initial impression was that it focused heavily on Microsoft's own products, such as Windows and .NET-specific configurations. That emphasis was clear in most places, but it mostly showed in the way they split their offerings into two.

Initial setup was fairly simple, as I wanted to mirror the infrastructure I had used on AWS. One problem was that the larger free tier VM offering was out of stock, so I had to settle for one vCPU and limit my options further. This had me breaking up my Docker stack even more and opting for more serverless options. Luckily, I only had to convert a few tasks to get everything working again, which meant I could remove the task queue and worker from the VM, freeing up precious RAM and CPU for the website.

    resource "azurerm_service_plan" "service_plan" {
      name                = "${var.project}-service-plan"
      resource_group_name = data.azurerm_resource_group.rg.name
      location            = data.azurerm_resource_group.rg.location
      os_type             = "Linux"
      sku_name            = "B1"
    }

    resource "azurerm_linux_function_app" "rss_feed" {
      name                       = "${var.project}-linux-function-app"
      resource_group_name        = data.azurerm_resource_group.rg.name
      location                   = data.azurerm_resource_group.rg.location
      storage_account_name       = data.azurerm_storage_account.storage_account.name
      storage_account_access_key = data.azurerm_storage_account.storage_account.primary_access_key
      service_plan_id            = azurerm_service_plan.service_plan.id
      https_only                 = true

      site_config {
        application_stack {
          python_version = "3.12"
        }

        always_on    = false
        worker_count = 1

        app_service_logs {
          disk_quota_mb         = 35
          retention_period_days = 1
        }
      }

      app_settings = {
        AUTH_USER                      = var.auth_user
        AUTH_TOKEN                     = var.auth_token
        APPINSIGHTS_INSTRUMENTATIONKEY = data.azurerm_application_insights.logging.instrumentation_key
      }
    }
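
Both resources read from data sources for an existing resource group, storage account and Application Insights instance. A sketch of how those lookups could be declared, assuming the resources were created outside this configuration. The three name variables are hypothetical and only the data source labels come from the snippet above:

```hcl
# Hypothetical inputs naming resources that already exist in the subscription.
variable "resource_group_name" {
  type = string
}

variable "storage_account_name" {
  type = string
}

variable "app_insights_name" {
  type = string
}

data "azurerm_resource_group" "rg" {
  name = var.resource_group_name
}

# Storage account backing the function app.
data "azurerm_storage_account" "storage_account" {
  name                = var.storage_account_name
  resource_group_name = data.azurerm_resource_group.rg.name
}

# Supplies the instrumentation key passed into app_settings.
data "azurerm_application_insights" "logging" {
  name                = var.app_insights_name
  resource_group_name = data.azurerm_resource_group.rg.name
}
```

Using data sources rather than resources here means Terraform only reads these objects and will not try to create or destroy them.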

This brings us to today, where I am still using this configuration. I have definitely built up experience, and my core understanding of hosting infrastructure requirements has grown. Time will keep moving and I will probably have to look for another solution in the future, but for now Azure will be the home of dkasa.dev. Cloudflare's new offerings are definitely on my mind, and I already use Cloudflare for DNS, so that might be the next stop on this journey.