External

Hacker News

Great further discussion about database migrations.

Bad migrations have caused me many hours of headaches as I had to discard old data due to bad versioning and consistency. At some point I didn't even know that you could migrate old data effectively. Luckily Django has exposed me to such tools due to having builtin migrations since the 1.7 update almost a decade ago now.

Most migrations tools including Django's follow a declarative schema management model where you provide different states to a solver and it will try mould existing schema into what actually is declared. Django migrations follow a more sequential order, so previous migrations will have to succeed first in order for the next one to succeed, but some systems follow a more asymmetric procedure, similar to how dependency managers like Poetry, Conda or NPM would resolve dependency/versioning issues.

Even with such tools if you are not procedural enough with them you will still end up breaking databases somehow. Fragile states probably have something to do with this as some data models/structures will have terrible technical debt and will need to be converted and maintained at some point. Skill/experience level when doing this kind of procedure does show and I need to find ways of improving future migrations that I will be performing.

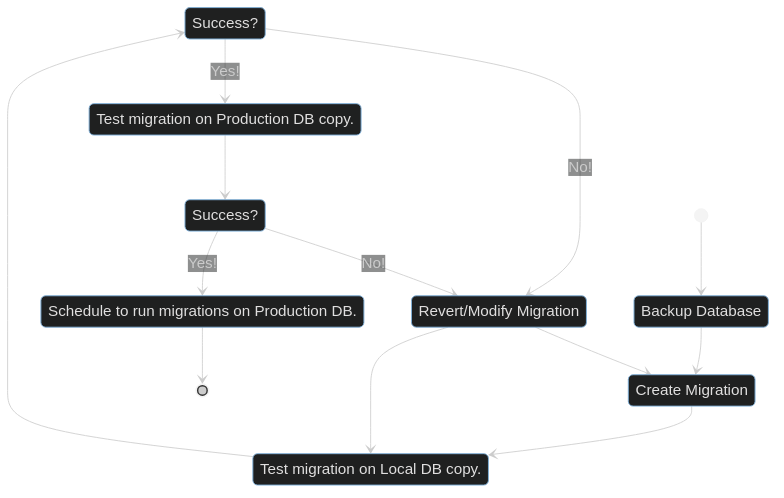

Creating a system from this is simple enough as its close to the core code pipeline driving for a test driven methodology.

Even though this kind of system might seem a bit overkill. Double testing clarifies some issues with edge cases as you can prepare a local database for these quiet easily, since production will not be guaranteed to check all possible data states.

In larger team settings this would have to follow a prioritising queue structure due to the amount of migrations that could be coming in, since multiple migrations from different sources will show conflict at one point or another.

Of course I only have very crude knowledge on migrating busy production databases, but the final step usually revolves around a rolling release as you will stop database writes while allowing some reads and migrate parts of the database over or having a maintenance window if the product allows for it.

There are also tools such as Flyway or Liquibase for automated cross database management following a more managed state like IaC tools such as Terraform for managing the details if you have to constantly jump between different platforms, frameworks and databases.